For novel technologies, evidence from pilot projects is essential for pinpointing critical unknowns as early as possible. The resulting knowledge base is the foundation for an effective early-stage risk assessment.

Systematic Risk Assessment

An effective project risk analysis should center on a clearly defined process for:

- Tracking and understanding the status of process development.

- Listing issues and results to date.

- Defining and rating specific technical risks.

- Developing potential alternatives and mitigation strategies.

A gated process with well-defined evaluation points can be pivotal in deciding whether the project should move forward. This process helps generate a road map for defining the uncertainties inherent to scaling up new technologies or implementing novel processes.
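
As a minimal sketch of how risk ratings could feed a gate decision, the Python below scores each risk as likelihood times impact and flags the project when a high-scoring risk has no mitigation. The 1-to-5 scales, the `stop_score` threshold, the `Risk` and `gate_review` names, and the example risks are all illustrative assumptions, not a prescribed method.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    name: str
    likelihood: int       # 1 (rare) to 5 (almost certain); assumed scale
    impact: int           # 1 (minor) to 5 (fatal to the project); assumed scale
    mitigation: str = ""  # empty string means no mitigation identified yet

    @property
    def score(self) -> int:
        return self.likelihood * self.impact


def gate_review(risks, stop_score=20):
    """Return a recommendation and the risks ranked by score, highest first.

    Assumed rule: recommend "stop" if any risk scores at or above
    stop_score and has no mitigation; otherwise "proceed".
    """
    ranked = sorted(risks, key=lambda r: r.score, reverse=True)
    fatal = [r for r in ranked if r.score >= stop_score and not r.mitigation]
    return ("stop" if fatal else "proceed"), ranked


# Hypothetical register covering technical and nontechnical risks.
register = [
    Risk("Product demand below forecast", 2, 3, "Secure offtake agreement"),
    Risk("Feedstock supply unproven at scale", 4, 5),
    Risk("Reactor fouling seen in pilot runs", 3, 4, "Test alternative internals"),
]

decision, ranked = gate_review(register)
print(decision)
for r in ranked:
    print(r.score, r.name)
```

In practice the rating scales and the stop rule would be agreed by the project team before the first gate, so that each evaluation point applies the same criteria.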

The project risk analysis should encompass the project holistically, including nontechnical issues such as feedstock availability and logistics, product demand and distribution, CAPEX/OPEX targets, government incentives, and financing structure. A new process can be technically viable yet still fail on logistical or economic grounds. Addressing these issues early in development helps confirm that the program is worth the investment.

A rigorous risk analysis should not assume that mitigation strategies can offset every risk factor. Skepticism is healthy; ending a development program due to a fatal flaw is not a failure. Indeed, recognizing such a flaw as early as possible in the planning process is the best way to limit unnecessary spending and should be regarded positively in a healthy organization.

Aligning the Right Resources

Engineering experience is an important foundation for performing new technology evaluations. Ideally, the engineering team should offer:

- Diverse, hands-on project experience, including knowledge of development and scale-up, as well as full-scale project execution.

- Understanding of a wide variety of unit operations, which is key for informing equipment selection and system design while incorporating lessons learned from existing technologies.

- A technology-agnostic approach backed by cross-functional knowledge and experience to facilitate collaboration and avoid premature or misguided selection of a particular technological approach or set of unit operations.

- Strong process simulation capabilities.

Beyond these fundamental capabilities, the engineering team should understand when more specialized skills will be needed. Examples include computational fluid dynamics (CFD) modeling, development of phase equilibria and/or reaction thermochemistry data, and reactive chemical relief evaluation, such as reaction calorimetry coupled with Design Institute for Emergency Relief Systems (DIERS) relief methodology. CFD modeling can be helpful in understanding multiphase fluid flow through a chemical reactor, while reaction calorimetry may be required to support safety system design for exothermic chemical reactions.

Beyond these engineering resources, broader management knowledge helps in forecasting CAPEX/OPEX, exploring feed and product logistics, conducting market research to understand target buyers and competitive financial targets, and locating funding sources for developing new technologies.

Avoiding Internal Bias in New Technology Evaluation

A cross-functional team helps avoid internal bias toward particular technological approaches. Limited experience with alternative approaches, engineers’ natural pride of ownership, and desire to successfully sell the original design concept may limit the scope of the technology evaluation process. In many cases, this bias includes a narrow focus on known technologies and unit operations within the same industry — even when other industries have a long history of solving closely related engineering challenges using other methods.

To avoid these issues, the team should collaborate frequently, reassess all available information, and maintain a healthy skepticism. Novel projects demand new knowledge, and project teams must be willing to learn from relevant sister industries and borrow solutions that are already proven. If other industries have employed a similar technology or a different technology in a similar application, their hard-won knowledge should not be ignored for the sake of comfort with more familiar unit operations.

Cost Estimation

Accurate cost estimates are a key pillar for evaluating the feasibility of new processes. As such, they should be approached from several perspectives as soon as a base-case design concept is available. A comprehensive bottom-up CAPEX estimate should be developed for the base-case design. In parallel, a full economic model should be developed to show the balance required between product margin, CAPEX and OPEX to yield an economically viable project. The economic model can then give the technical team CAPEX and OPEX targets and guide trade-offs among project scope, feedstock costs and OPEX. For example, it may be warranted to accept a lower product margin because the purchase of a more expensive, higher-quality feedstock allows a significant reduction in CAPEX.
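
The feedstock trade-off above can be illustrated with a very simple economic model. The sketch below compares two hypothetical cases on net present value (NPV), assuming all CAPEX is spent at year 0 and the annual margin is constant. Every price, cost, volume, project life, and discount rate shown is a made-up placeholder, and the function names are illustrative only; a real model would also cover ramp-up, taxes, depreciation, and financing.

```python
def npv(capex, annual_cash_flow, years, discount_rate):
    """NPV with all CAPEX at year 0 and a constant cash flow in years 1..years."""
    discounted = sum(
        annual_cash_flow / (1 + discount_rate) ** t for t in range(1, years + 1)
    )
    return discounted - capex


def annual_margin(product_price, feed_cost, opex, volume):
    """Annual cash margin; prices and costs are per unit of product (assumed basis)."""
    return (product_price - feed_cost - opex) * volume


# Hypothetical inputs shared by both cases.
PRICE, VOLUME, YEARS, RATE = 600.0, 100_000, 15, 0.10

# Case A: cheaper feedstock, but more purification equipment (higher CAPEX and OPEX).
margin_a = annual_margin(PRICE, feed_cost=300.0, opex=150.0, volume=VOLUME)
npv_a = npv(capex=120e6, annual_cash_flow=margin_a, years=YEARS, discount_rate=RATE)

# Case B: more expensive, higher-quality feedstock with a simpler, cheaper plant.
margin_b = annual_margin(PRICE, feed_cost=330.0, opex=140.0, volume=VOLUME)
npv_b = npv(capex=80e6, annual_cash_flow=margin_b, years=YEARS, discount_rate=RATE)

print(f"Case A: margin {margin_a:,.0f}/yr, NPV {npv_a:,.0f}")
print(f"Case B: margin {margin_b:,.0f}/yr, NPV {npv_b:,.0f}")
```

With these placeholder numbers, Case B earns a lower annual margin but a higher NPV because of its lower CAPEX. The outcome depends entirely on the assumed inputs, which is why the economic model should be built alongside the design rather than after it.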

CAPEX is easily underestimated in the early phases because of outdated cost data and unrealistic installed-cost factors. Incomplete scope development may also lead to missed cost drivers such as:

- Ancillary process systems

- Feed/product treatment and purification

- Waste disposal

- Wastewater treatment

- Utility systems

- Logistics and storage

- Site development, including construction of roads, rail, buildings and other infrastructure
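
To show how these effects can compound, the sketch below escalates a dated equipment quote with a cost-index ratio, applies an installed-cost factor, and adds scope outside the core process. The index values, factors, quote, and scope allowances are hypothetical placeholders chosen for illustration, not published data, and the function names are assumptions.

```python
def escalate(cost_then, index_then, index_now):
    """Bring a dated cost to today's basis using a ratio of cost-index values."""
    return cost_then * index_now / index_then


def installed_cost(purchased_cost, installed_factor):
    """Factor purchased equipment cost up to installed cost."""
    return purchased_cost * installed_factor


# All figures below are hypothetical placeholders.
old_quote = 2_000_000              # purchased equipment cost from a dated quote
index_then, index_now = 500, 800   # assumed cost-index values, not real data

# Optimistic early estimate: stale quote, low factor, core process only.
optimistic = installed_cost(old_quote, installed_factor=3.0)

# Fuller estimate: escalated quote, higher factor, plus scope outside the core.
current_quote = escalate(old_quote, index_then, index_now)
extra_scope = {
    "utility systems": 3_000_000,
    "wastewater treatment": 1_500_000,
    "site development": 2_500_000,
}
fuller = installed_cost(current_quote, installed_factor=4.0) + sum(extra_scope.values())

print(f"optimistic: {optimistic:,.0f}")
print(f"fuller:     {fuller:,.0f}")
print(f"ratio:      {fuller / optimistic:.2f}")
```

Each adjustment looks modest on its own, but with these assumed inputs the fuller estimate is several times the optimistic one, which is the kind of gap that early scope definition is meant to expose.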

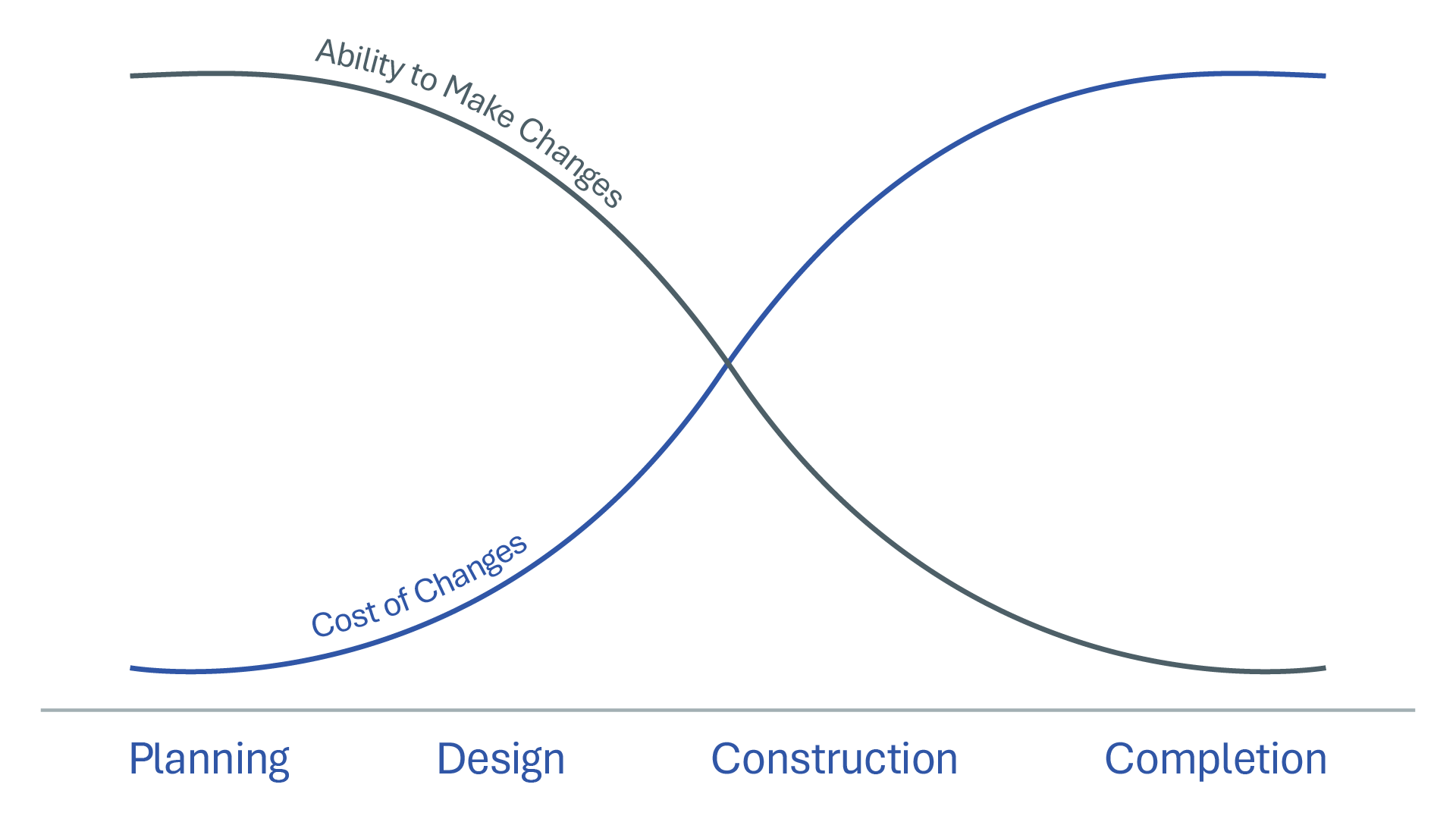

Developing a robust, holistic cost estimate as early as possible is critical due to the dynamics shown by the Cost Influence Curve, illustrated in Figure 2. Later in the development cycle, more and more costs become effectively locked in. The earlier that key parameters can be defined, the greater the opportunity for effective cost control. At a strategic level, more robust cost estimates early in the planning stage will help decision-makers better allocate limited capital.