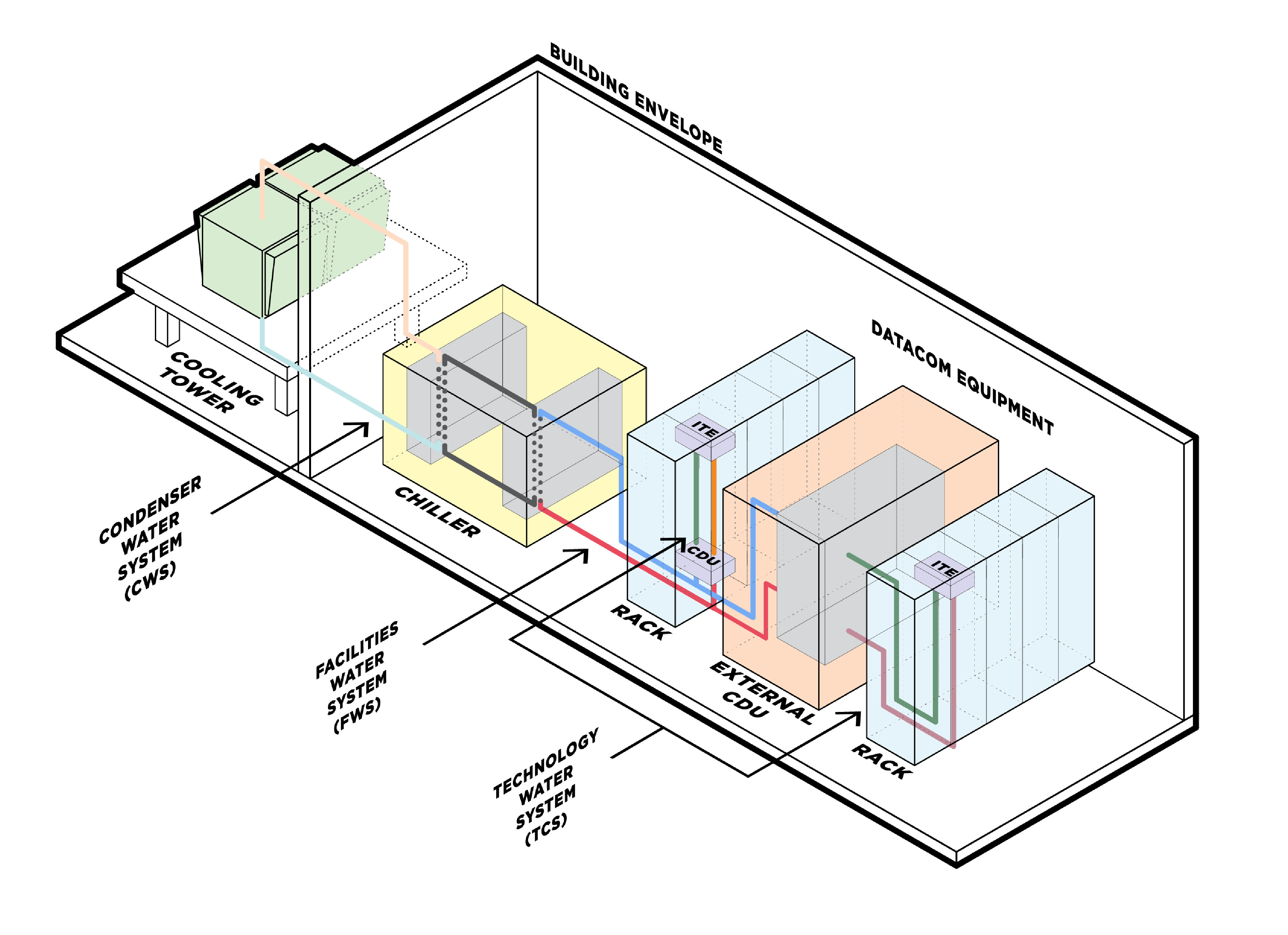

Liquid cooling brings fluids onto the white space floor, a practice that has long been forbidden or permitted only with containment and leak detection systems in place. With liquid cooling, however, there is no way around it. The liquid-cooled environment consists of piping, coolant distribution units (CDUs), some form of liquid heat exchanger or heat sink at the IT equipment (ITE), liquid-tight hoses, drip-proof connections, and the controls and valving that accompany the solution.

The densification of ITE has created a thermal footprint that requires a large volume of fluid to be delivered directly to the ITE, a condition unique to the liquid-cooled market. This volume of fluid in the white space should prompt a conversation about the owner’s perception of, and tolerance for, risk. There are fluid options to suit an owner’s perspective. Dielectric fluids, engineered fluids and glycol solutions are all common heat transfer fluids found in the technology cooling systems (TCS) that serve liquid-cooled ITE.

It may be tempting to take facility water directly to the ITE, but this is commonly avoided for two main reasons. First, the chip sets require a much higher level of fluid purity than is commonly found in traditional facility water systems. Second, keeping facility water out of the ITE limits the volume of water per circuit, so that if a leak occurs, a smaller volume has to be cleaned up.

Regardless of the approach chosen for liquid cooling, TCS fluids must reject their heat to the data center’s mechanical infrastructure, typically through liquid-to-liquid heat exchange. As a result, it is common to see large facility water system (FWS) piping mains in the mechanical spaces serving the white space and a significant volume of TCS piping in the white space itself.

Once a TCS fluid is chosen, it is crucial to keep that same fluid throughout the life of the infrastructure. Each TCS fluid has its own density, thermal capacity, viscosity and chemistry. If the fluid changes during the infrastructure’s lifetime, changes to the rate of heat removal, the CDU components and the facility components should be anticipated.
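To illustrate why a fluid change affects the rate of heat removal, the sketch below applies the sensible heat relation Q = ṁ · cp · ΔT to estimate the flow needed to carry a given heat load. The function names, the 100 kW load, the 10 K temperature rise and the property values for water and a 25% propylene glycol mix are rough assumptions for illustration only, not vendor or design data.

```python
def required_flow_lpm(heat_kw, density_kg_m3, cp_kj_kg_k, delta_t_k):
    """Volumetric flow (L/min) needed to carry heat_kw at a given temperature rise."""
    # Sensible heat: Q = m_dot * cp * dT, so m_dot = Q / (cp * dT)
    mass_flow_kg_s = heat_kw / (cp_kj_kg_k * delta_t_k)
    vol_flow_m3_s = mass_flow_kg_s / density_kg_m3
    return vol_flow_m3_s * 1000.0 * 60.0  # m^3/s -> L/min


# Approximate, illustrative fluid properties (assumptions, not vendor data).
FLUIDS = {
    "water": {"density_kg_m3": 997.0, "cp_kj_kg_k": 4.18},
    "pg25": {"density_kg_m3": 1020.0, "cp_kj_kg_k": 3.90},
}


def compare_fluids(heat_kw=100.0, delta_t_k=10.0):
    """Return the required flow in L/min for each illustrative fluid."""
    return {
        name: required_flow_lpm(heat_kw, delta_t_k=delta_t_k, **props)
        for name, props in FLUIDS.items()
    }


if __name__ == "__main__":
    for name, flow in compare_fluids().items():
        print(f"{name}: {flow:.1f} L/min")
```

Under these assumed properties, the glycol mix needs a few percent more flow than water for the same load and temperature rise. Viscosity, which this sketch ignores, would further affect pump sizing and pressure drop.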

There is well-founded concern in the data center community about water consumption and water scarcity, with some jurisdictions prohibiting data centers from consuming any potable water whatsoever. The most widely accepted metric for water consumption is water usage effectiveness (WUE), the ratio of annual site water use to IT equipment energy, usually expressed in liters per kilowatt-hour. WUE can be substantial in traditional air-cooled data centers that employ evaporative cooling, which typically consume millions of gallons of water per megawatt per year.
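As a rough check on that scale, the sketch below converts a WUE value into annual water use for 1 MW of constant IT load. The function names and the 1.8 L/kWh input are assumptions chosen only for illustration; a real site's figure depends on climate, equipment and operation.

```python
LITERS_PER_US_GALLON = 3.785411784
HOURS_PER_YEAR = 8760


def wue(annual_water_liters, annual_it_energy_kwh):
    """Water usage effectiveness in liters per kWh of IT energy."""
    return annual_water_liters / annual_it_energy_kwh


def annual_water_gallons_per_mw(wue_l_per_kwh):
    """US gallons per year for 1 MW of IT load running at constant power."""
    it_energy_kwh = 1000.0 * HOURS_PER_YEAR  # 1 MW = 1000 kW for every hour
    return wue_l_per_kwh * it_energy_kwh / LITERS_PER_US_GALLON


if __name__ == "__main__":
    assumed_wue = 1.8  # L/kWh, illustrative assumption only
    gallons = annual_water_gallons_per_mw(assumed_wue)
    print(f"{gallons:,.0f} gallons per MW per year")
```

With the assumed 1.8 L/kWh, the result is a little over four million gallons per megawatt per year, consistent with the "millions of gallons" order of magnitude.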

Transitioning to liquid cooling presents an opportunity to drive the WUE toward zero. The WUE of a liquid-cooled data center depends on the final means of heat rejection. For example, if dry coolers can be used for all hours of the year in a specific climate, the WUE trends toward zero.
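The dependence on heat rejection mode can be sketched as a simple hourly tally. The example below is a minimal illustration that assumes a constant IT load, a hypothetical per-hour mode label of "dry" or "evap", and a fixed water intensity during evaporative hours. It ignores make-up water for closed loops and any other site water use.

```python
def annual_wue(hourly_modes, evaporative_wue_l_per_kwh, it_load_kw=1000.0):
    """Blended WUE (L/kWh) from a sequence of hourly heat rejection modes.

    hourly_modes: iterable of "dry" or "evap", one entry per hour.
    evaporative_wue_l_per_kwh: assumed water intensity while evaporating.
    """
    water_liters = 0.0
    it_energy_kwh = 0.0
    for mode in hourly_modes:
        it_energy_kwh += it_load_kw  # constant load for one hour
        if mode == "evap":
            water_liters += evaporative_wue_l_per_kwh * it_load_kw
        # "dry" hours are assumed to consume no water
    return water_liters / it_energy_kwh if it_energy_kwh else 0.0


if __name__ == "__main__":
    all_dry = ["dry"] * 8760
    mixed = ["dry"] * 6570 + ["evap"] * 2190  # evaporative for a quarter of the year
    print(annual_wue(all_dry, 2.0))
    print(annual_wue(mixed, 2.0))
```

In this simplified model, a climate that allows dry cooling in every hour yields a WUE of zero, and each hour that requires evaporative assist raises the annual figure in proportion.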